CIS 9615. Analysis of Algorithms

Basic Concepts

1. Solving problems with algorithms

Problem: specification of (1) input domain, (2) output range, and (3) mapping from each input to an output.

Algorithm: a (finite and predetermined) sequence of (directly executable or already defined) computational steps that produces an output for a valid input in finite time.
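To make the two definitions concrete, here is a minimal Python sketch. The problem and the function name `find_max` are hypothetical illustrations, not taken from these notes. The problem is specified by its input domain (nonempty lists of numbers), its output range (numbers), and its mapping (each list maps to its largest element). The algorithm is a finite, predetermined sequence of directly executable steps that halts after a single pass over the input.

```python
def find_max(items):
    """Return the largest element of a nonempty list (hypothetical example)."""
    if not items:
        # Outside the input domain: the problem is only specified for nonempty lists.
        raise ValueError("input must be a nonempty list")
    best = items[0]
    for x in items[1:]:
        # One comparison per remaining element, so the loop always terminates.
        if x > best:
            best = x
    return best


print(find_max([3, 1, 4, 1, 5, 9, 2, 6]))  # prints 9
```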

Desired properties of an algorithm, as a solution to a problem:

- correctness: satisfying the problem specification

- efficiency: low time and space cost

- simplicity: conceptual naturalness and brevity of description

Compromises are usually needed among these factors and others, depending on the practical application.

Programming: implementing an algorithm in a programming language.

2. Time efficiency analysis

The actual time used by an algorithm depends on:

- the problem-solving method of the algorithm,

- the concrete problem instance it is given,

- the software and hardware in which the algorithm is implemented and executed.

To compare the efficiency of different algorithms, several simplifications are applied:

- assuming a constant time cost for each type of computational step, so that only the number of steps needs to be counted,

- measuring the size of an instance, usually by the length of its description,

- for a given size, only considering the worst-case (or average-case, or best-case) situation,

- measuring the efficiency (time complexity) of an algorithm in that situation as a function of instance size,

- only considering how fast the function value increases as size increases,

- classifying functions into categories by order of growth.

Remember: in this process there are many assumptions made and many factors ignored!
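As an illustration of these simplifications, the Python sketch below counts comparisons instead of measuring time, assuming each comparison costs one unit. The function `linear_search_steps` is a hypothetical example, not from these notes. For an instance of size n, the worst case (target absent) takes n comparisons and the best case (target first) takes 1, so the worst-case time complexity is the function n.

```python
def linear_search_steps(items, target):
    """Return (index of target or -1, number of comparisons) for a left-to-right scan."""
    comparisons = 0
    for i, x in enumerate(items):
        comparisons += 1  # each comparison is assumed to cost one unit of time
        if x == target:
            return i, comparisons
    return -1, comparisons


for n in (10, 100, 1000):
    data = list(range(n))
    _, best = linear_search_steps(data, 0)    # target is first: best case
    _, worst = linear_search_steps(data, -1)  # target absent: worst case
    print(n, best, worst)
```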

The asymptotic efficiency of algorithms, represented by a simple function g(n):

- Θ(g(n)) = {f(n) : there exist positive constants $c_1$, $c_2$, and $n_0$ such that $0 \le c_1 g(n) \le f(n) \le c_2 g(n)$ for all $n \ge n_0$}. Here g(n) is an asymptotically tight bound for f(n).

- O(g(n)) = {f(n) : there exist positive constants c and $n_0$ such that $0 \le f(n) \le c\,g(n)$ for all $n \ge n_0$}. Here g(n) is an asymptotic upper bound for f(n).

- Ω(g(n)) = {f(n) : there exist positive constants c and $n_0$ such that $0 \le c\,g(n) \le f(n)$ for all $n \ge n_0$}. Here g(n) is an asymptotic lower bound for f(n).

- o(g(n)) = {f(n) : for every positive constant c, there exists a constant $n_0 > 0$ such that $0 \le f(n) \le c\,g(n)$ for all $n \ge n_0$}. Here g(n) is an upper bound for f(n) that is not asymptotically tight.

- ω(g(n)) = {f(n) : for every positive constant c, there exists a constant $n_0 > 0$ such that $0 \le c\,g(n) \le f(n)$ for all $n \ge n_0$}. Here g(n) is a lower bound for f(n) that is not asymptotically tight.
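The constants in the definition of Θ act as a witness that can be checked. The Python sketch below (the helper `holds_theta_witness` is hypothetical) tests a proposed witness on a finite range of n. Such a check can refute a witness but cannot prove the "for all n ≥ n0" part. The example uses f(n) = n²/2 − 3n and g(n) = n² with c1 = 1/14, c2 = 1/2, n0 = 7. Algebraically, f(n) ≥ n²/14 is equivalent to 3/n ≤ 3/7, that is, n ≥ 7.

```python
from fractions import Fraction


def holds_theta_witness(f, g, c1, c2, n0, n_max=10_000):
    """Check 0 <= c1*g(n) <= f(n) <= c2*g(n) for every integer n in [n0, n_max].

    A finite check can refute a proposed witness (c1, c2, n0), but it cannot
    prove the "for all n >= n0" part of the definition.
    """
    return all(0 <= c1 * g(n) <= f(n) <= c2 * g(n) for n in range(n0, n_max + 1))


def f(n):
    # n^2/2 - 3n, kept exact with Fraction to avoid floating-point rounding
    return Fraction(n * n, 2) - 3 * n


def g(n):
    return n * n


print(holds_theta_witness(f, g, Fraction(1, 14), Fraction(1, 2), n0=7))  # True
print(holds_theta_witness(f, g, Fraction(1, 14), Fraction(1, 2), n0=6))  # False, since f(6) = 0
```

The choice of n0 matters: the same c1 and c2 fail if the check starts at n = 6.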

The growth order of a function is usually written as f(n) = F(g(n)), which actually means f(n) ∈ F(g(n)), where F is one of the five notations above. Intuitively, the five notations correspond to the relations =, ≤, ≥, <, and >, respectively, though not all functions are comparable in growth order (for example, n and $n^{1 + \sin n}$ are not).

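To decide the relation between two functions (assuming g(n) > 0 for large n), examine the limit of their ratio as n → ∞:

- f(n) = o(g(n)) if and only if $\lim_{n \to \infty} f(n)/g(n) = 0$.

- f(n) = Θ(g(n)) if $\lim_{n \to \infty} f(n)/g(n) = c$ for some positive constant c. The converse fails when the limit does not exist: f(n) = n(2 + sin n) is Θ(n), yet f(n)/n = 2 + sin n has no limit.

- f(n) = ω(g(n)) if and only if $\lim_{n \to \infty} f(n)/g(n) = \infty$.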

Furthermore, in the first two cases f(n) = O(g(n)), and in the last two, f(n) = Ω(g(n)).
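The ratio test can be explored numerically. The Python sketch below (the helper `ratios` is hypothetical) evaluates f(n)/g(n) at n = 10, 100, and so on. This only hints at the limit; it is not a proof, and the function pairs are arbitrary examples.

```python
import math


def ratios(f, g, exponents=range(1, 7)):
    """Return f(n)/g(n) for n = 10**k; a hint about the limit, not a proof."""
    return [f(10**k) / g(10**k) for k in exponents]


# n lg n versus n^2: the ratio shrinks toward 0, suggesting n lg n = o(n^2).
print(ratios(lambda n: n * math.log2(n), lambda n: n**2))
# 5n^2 + 3n versus n^2: the ratio approaches 5, suggesting Θ(n^2).
print(ratios(lambda n: 5 * n**2 + 3 * n, lambda n: n**2))
# 2^n versus n^3 (fewer points, to keep the numbers finite as floats): the ratio grows without bound.
print(ratios(lambda n: 2**n, lambda n: n**3, exponents=range(1, 4)))
```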

The major growth orders, from low to high, are:

- constant: Θ(1)

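- logarithmic: $\Theta(\log_a n)$, a > 1 (all bases give the same class, since $\log_a n = \log_b n / \log_b a$)

- polynomial: $\Theta(n^a)$, a > 0, further divided into linear $\Theta(n)$, quadratic $\Theta(n^2)$, cubic $\Theta(n^3)$, and so on

- exponential: $\Theta(a^n)$, a > 1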
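The Python sketch below tabulates one representative of each growth order for a few sizes. The bases and sizes are arbitrary choices for illustration.

```python
import math

# One representative from each growth order listed above (bases chosen arbitrarily).
orders = {
    "1": lambda n: 1,
    "log2 n": lambda n: math.log2(n),
    "n": lambda n: n,
    "n^2": lambda n: n**2,
    "n^3": lambda n: n**3,
    "2^n": lambda n: 2**n,
}

for n in (8, 16, 32, 64):
    row = ", ".join(f"{name}={fn(n):,.0f}" for name, fn in orders.items())
    print(f"n={n}: {row}")
```

At n = 8 the cubic value 512 still exceeds 2^8 = 256. The ordering from low to high only holds for sufficiently large n, which is exactly what asymptotic notation captures.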

Asymptotic notations can be used in equations and inequalities, as well as in certain calculations. For example, when several functions are added, only the one with the highest growth order matters: $\Theta(n^2) + \Theta(n) + \Theta(1) = \Theta(n^2)$.
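A worked derivation shows why lower-order terms drop out. The polynomial 3n² + 100n + 5 is an arbitrary example chosen for illustration. Bounding each term by a multiple of n² gives constants that satisfy the definition of Θ:

```latex
\begin{aligned}
3n^2 &\le 3n^2 + 100n + 5, \\
3n^2 + 100n + 5 &\le 3n^2 + 100n^2 + 5n^2 = 108n^2 \qquad \text{for all } n \ge 1.
\end{aligned}
```

Hence c1 = 3, c2 = 108, and n0 = 1 witness 3n² + 100n + 5 = Θ(n²). The terms 100n and 5 only enlarge c2. The ratio (3n² + 100n + 5)/n² also tends to the positive constant 3.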

3. Analysis of recurrences

An algorithm is usually analyzed by "Divide-and-Conquer": analyzing the parts recursively, then adding the costs together.

Recursion: part of a problem is a simpler problem of the same type.
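Binary search on a sorted list is a standard example of recursion, chosen here for illustration; it is not an algorithm from these notes. The Python sketch below (function name hypothetical) either answers an empty range directly or reduces the problem to one subproblem of the same type with about half the size.

```python
def binary_search(items, target, lo=0, hi=None):
    """Return an index of target in the sorted list items, or -1 if absent.

    Each call either answers directly (empty range) or reduces the problem
    to one subproblem of the same type with about half the size.
    """
    if hi is None:
        hi = len(items)
    if lo >= hi:  # simplest instance: empty range, solved directly
        return -1
    mid = (lo + hi) // 2
    if items[mid] == target:
        return mid
    if items[mid] < target:
        return binary_search(items, target, mid + 1, hi)  # search the right half
    return binary_search(items, target, lo, mid)          # search the left half


print(binary_search([1, 3, 5, 7, 9, 11], 7))  # prints 3
print(binary_search([1, 3, 5, 7, 9, 11], 4))  # prints -1
```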

Assume the algorithm

- directly solves problem instances in constant time if their size is smaller than a constant c,

- otherwise uses D(n) time to divide a problem into a smaller subproblems of the same type, each with 1/b of the original size,

- combines their solutions in C(n) time to get the solution for the original problem,

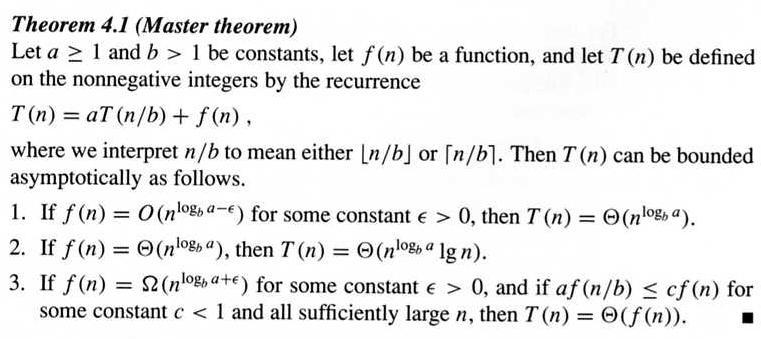

then the algorithm's cost follows the following recurrence:
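The cost recurrence can be evaluated directly for concrete parameters. In the Python sketch below, the name `recurrence_cost` is hypothetical. The sketch assumes T(n) = 1 for n < c, an integer b ≥ 2, and floor division in place of n/b. The merge-sort-like parameters (a = 2, b = 2, D(n) = 1, C(n) = n) are an assumption chosen for illustration.

```python
def recurrence_cost(n, a, b, c, D, C):
    """Evaluate T(n) = a*T(n // b) + D(n) + C(n) for n >= c, and T(n) = 1 for n < c.

    Floor division stands in for n/b; the a subproblems all have the same size,
    so T(n // b) is computed once and multiplied by a.
    """
    if n < c:
        return 1  # constant cost for small instances
    return a * recurrence_cost(n // b, a, b, c, D, C) + D(n) + C(n)


# Merge-sort-like parameters (an assumption for illustration): split into
# a = 2 halves (b = 2) in constant time, then combine in linear time.
for n in (2, 4, 8, 1024):
    print(n, recurrence_cost(n, a=2, b=2, c=2, D=lambda m: 1, C=lambda m: m))
```

For n a power of two these values equal n lg n + 2n − 1 (5, 15, 39, and 12287 above), which can be proved by induction on lg n and is consistent with Θ(n lg n).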

To solve a recurrence:

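- substitution method: guess the form of the solution, then use mathematical induction to prove that the guess is correct;

- recursion tree method: draw the recurrence as a tree whose nodes hold the non-recursive cost of each subproblem, then sum the costs level by level; the height of the tree is $\log_b n$, and the number of leaves is $a^{\log_b n}$, which equals $n^{\log_b a}$ (both are $b^{\log_b a \cdot \log_b n}$);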

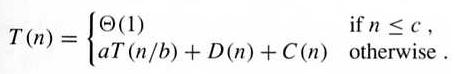

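- the master method: for T(n) = aT(n/b) + f(n), where a ≥ 1 and b > 1 are constants and f(n) = D(n) + C(n),

  (1) if $f(n) = O(n^{\log_b a - \epsilon})$ for some constant ε > 0, then $T(n) = \Theta(n^{\log_b a})$;

  (2) if $f(n) = \Theta(n^{\log_b a})$, then $T(n) = \Theta(n^{\log_b a} \lg n)$;

  (3) if $f(n) = \Omega(n^{\log_b a + \epsilon})$ for some constant ε > 0, and $a f(n/b) \le d f(n)$ for some constant d < 1 and all sufficiently large n, then $T(n) = \Theta(f(n))$.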

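In the recursion tree, the three cases correspond to where most of the cost is spent: in case (1) the leaves dominate, in case (2) every level contributes about equally, and in case (3) the root dominates.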
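The relative cost of the root and the leaves can be seen by tabulating the recursion tree level by level. The Python sketch below uses a hypothetical helper `level_costs`. It assumes n is an exact power of b and that each leaf costs 1. Level i has a^i nodes, each working on a subproblem of size n/b^i.

```python
def level_costs(n, a, b, f):
    """Per-level costs of the recursion tree of T(n) = a*T(n/b) + f(n).

    Level i has a**i nodes, each working on a subproblem of size n / b**i,
    so its total non-recursive cost is a**i * f(n / b**i). The tree stops at
    size-1 subproblems (the leaves), assuming n is an exact power of b.
    """
    costs = []
    size, nodes = n, 1
    while size > 1:
        costs.append(nodes * f(size))
        size //= b
        nodes *= a
    costs.append(nodes)  # a**(log_b n) leaves, each of constant cost 1
    return costs


n = 2**6
print(level_costs(n, 4, 2, lambda m: m))      # case (1): costs grow toward the leaves
print(level_costs(n, 2, 2, lambda m: m))      # case (2): equal cost on every level
print(level_costs(n, 2, 2, lambda m: m * m))  # case (3): the root dominates
```

In the first line the last entry, 4096 = 64², is the number of leaves $n^{\log_2 4}$.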

As a special case, when a = b, we have $\log_b a = 1$, and there are n leaves. The above results simplify to (1) Θ(n), (2) Θ(n lg n), and (3) Θ(f(n)), respectively.
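When f(n) is a polynomial Θ(n^k), applying the master method reduces to comparing k with $\log_b a$. The Python sketch below (the function `master_polynomial` is hypothetical) covers only that restricted form. The restriction is an assumption of the sketch. For such f the regularity condition of case (3) holds automatically, since $a(n/b)^k = (a/b^k)\,n^k$ and $a/b^k < 1$ whenever $k > \log_b a$.

```python
import math


def master_polynomial(a, b, k):
    """Solve T(n) = a*T(n/b) + Θ(n**k) by the master method (a >= 1, b > 1, k >= 0).

    Only polynomial f(n) = Θ(n**k) is handled; general f(n) needs the full theorem.
    Returns (case number, description of T(n)).
    """
    e = math.log(a, b)  # critical exponent log_b a
    if math.isclose(k, e, abs_tol=1e-9):
        return 2, f"Θ(n^{k} lg n)"
    if k < e:
        return 1, f"Θ(n^{e:g})"
    return 3, f"Θ(n^{k})"


print(master_polynomial(2, 2, 1))  # merge sort: (2, 'Θ(n^1 lg n)')
print(master_polynomial(8, 2, 2))  # (1, 'Θ(n^3)')
print(master_polynomial(1, 2, 0))  # binary search: (2, 'Θ(n^0 lg n)'), i.e. Θ(lg n)
print(master_polynomial(2, 2, 2))  # (3, 'Θ(n^2)')
```

The tolerance in `math.isclose` guards against rounding in `math.log(a, b)`. Exact comparison would be safer when a is known to be an exact power of b.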