CIS 203: Artificial Intelligence

Fall Semester 2001

Professor: Dr. Pei Wang

Report on Autonomous Vehicle Systems

By

James Laurence

Table of Contents

Autonomous Vehicle Systems

Motivation for Autonomous Vehicle Systems:

In many situations, direct human intervention is unnecessary or even undesirable. Environments that are hostile to humans, such as the vacuum of space or the high pressures of the ocean floor, pose real dangers to human explorers and should be avoided where possible. In addition, humans often place themselves in hazardous situations, ranging from drinking and driving to the extreme of combat against an enemy, such as aerial combat. If a means could be developed to remove or reduce the human element in these activities, many lives could be saved. This is one of the motivations behind the construction and implementation of autonomous systems. There are also activities that pose no life-threatening risk but are tedious or physically exhausting, such as landscaping work (grass cutting, bush trimming) and carpet vacuuming. Again, autonomous systems offer a solution. By giving people time to do what they enjoy instead of spending it on mundane chores (I would argue that most people don’t enjoy vacuuming, for instance), autonomous systems can improve the quality of life.

Some of the Technology Used in Autonomous Vehicle Systems:

Autonomous Navigation Systems:

Practical Applications / Examples of Some Autonomous Vehicles:

ANDI and CIMP

ANDI (Automated Nondestructive Inspector) and CIMP (Crown Inspection Mobile Platform), combined, form an alternative to traditional aircraft inspection. As stated in the introduction, one of the more noble goals of constructing autonomous systems is to improve the quality and safety of human life. Traditional aircraft inspection is conducted by human workers who, by the nature of the occupation, are in danger of serious bodily injury.

The autonomous aircraft inspection vehicle ANDI is the first part of the answer to this problem. ANDI is used primarily to detect minor skin problems on an airplane. At present, its cameras do not have high enough resolution to detect serious structural faults in an aircraft (Siegel 3). ANDI is used merely to conduct what Siegel refers to as “opportunistic kinds of visual inspection,” which consist mostly of finding dents in the aircraft (3).

The second part of the inspection team is CIMP. CIMP has an array of sensors with which to scan the aircraft, and it uses a large amount of image enhancement software to visually identify most kinds of defects (4). Without going into the details of how CIMP does this, some of the features of the system are image understanding, edge detection, and feature vector calculation (9). As a result of these enhancement algorithms, CIMP is almost as reliable as a human inspector in finding cracks and scratches and, most importantly, in discerning between the two (9). With even more software, the CIMP design team hopes to make it as dependable as a human inspector. Together, ANDI and CIMP promise to make human inspection of aircraft obsolete.
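
The sources consulted do not say how CIMP implements edge detection, so the following is only a generic illustration of the idea, not CIMP's method. It is a minimal sketch of a Sobel edge detector on a tiny grayscale image; the image size, the function names `sobel_at` and `detect_edges`, and the threshold parameter are all assumptions made for this example.

```c
#include <assert.h>
#include <stdlib.h>

#define W 5   /* image width in pixels (illustrative value) */
#define H 5   /* image height in pixels (illustrative value) */

/* Approximate gradient magnitude |gx| + |gy| at interior pixel (x, y). */
int sobel_at(unsigned char img[H][W], int x, int y)
{
    assert(x >= 1 && x < W - 1 && y >= 1 && y < H - 1);

    /* Horizontal gradient: weighted right column minus left column. */
    int gx = (img[y-1][x+1] + 2 * img[y][x+1] + img[y+1][x+1])
           - (img[y-1][x-1] + 2 * img[y][x-1] + img[y+1][x-1]);
    /* Vertical gradient: weighted bottom row minus top row. */
    int gy = (img[y+1][x-1] + 2 * img[y+1][x] + img[y+1][x+1])
           - (img[y-1][x-1] + 2 * img[y-1][x] + img[y-1][x+1]);

    return abs(gx) + abs(gy);
}

/* Set edges[y][x] to 1 where the gradient exceeds `threshold`, else 0.
   Border pixels stay 0 because the 3x3 window does not fit there. */
void detect_edges(unsigned char img[H][W], unsigned char edges[H][W],
                  int threshold)
{
    for (int y = 0; y < H; y++)
        for (int x = 0; x < W; x++)
            edges[y][x] = 0;

    for (int y = 1; y < H - 1; y++)
        for (int x = 1; x < W - 1; x++)
            edges[y][x] = sobel_at(img, x, y) > threshold;
}
```

On a 5x5 image whose two leftmost columns are dark (0) and whose remaining columns are bright (100), this sketch marks the interior pixels on either side of the dark/bright boundary and leaves the uniform bright region unmarked. Telling a crack from a scratch, as CIMP is reported to do, would require further steps (such as the feature vector calculation mentioned above) that are not shown here.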

Sage

    int calcspeed(int dir, int maxSpeed, int cycleTime)
    {
        /* update virtual sonar in the travel direction */
        updateVirtualSonars(dir);

        /* if close obstacle, then stop */
        if (frontBlocked()) {
            normal_acc();
            return (0);
        }
        /* else if middle distance obstacle, proportional speed */
        else if (forwardObstacle()) {
            middle_acc();
            return (calcSpeedObstacle(maxSpeed - 100));
        }
        /* else if NO obstacles for a long distance, full steam ahead ! */
        else if (clearTowardGoal()) {
            smooth_acc();
            return (maxSpeed);
        }
        /* finally, if path clear but far obstacle, reasonable speed */
        else
            return (maxSpeed - 100);
    }
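
The listing above relies on helper routines (`frontBlocked`, `forwardObstacle`, `clearTowardGoal`, `calcSpeedObstacle`, and the acceleration setters) that are not reproduced in this report, so it cannot be compiled on its own. As a rough, self-contained sketch of the same four-way decision, the version below replaces the sonar helpers with a single forward range reading. The distance thresholds, the linear scaling used for the "proportional speed" case, and the names `select_speed` and `proportional_speed` are assumptions made for illustration and are not taken from the Sage source.

```c
#include <assert.h>

/* Hypothetical thresholds in centimeters; not taken from the Sage source. */
#define STOP_DIST_CM    30   /* at or below this range, stop */
#define SLOW_DIST_CM   100   /* below this range, scale the speed */
#define CLEAR_DIST_CM  300   /* at or above this range, path is clear */
#define SPEED_MARGIN   100   /* same "maxSpeed - 100" margin as the listing */

/* Assumed stand-in for calcSpeedObstacle(): scale linearly from 0 at
   STOP_DIST_CM up to `cap` at SLOW_DIST_CM. */
static int proportional_speed(int range_cm, int cap)
{
    return cap * (range_cm - STOP_DIST_CM) / (SLOW_DIST_CM - STOP_DIST_CM);
}

/* Choose a speed from one forward range reading. */
int select_speed(int range_cm, int max_speed)
{
    assert(range_cm >= 0 && max_speed >= SPEED_MARGIN);

    if (range_cm <= STOP_DIST_CM)       /* close obstacle: stop */
        return 0;
    if (range_cm < SLOW_DIST_CM)        /* middle distance: proportional */
        return proportional_speed(range_cm, max_speed - SPEED_MARGIN);
    if (range_cm >= CLEAR_DIST_CM)      /* no obstacle for a long way */
        return max_speed;
    return max_speed - SPEED_MARGIN;    /* far obstacle: reasonable speed */
}
```

With these assumed thresholds and a maximum speed of 500, a reading of 10 cm yields 0, a reading of 65 cm yields 200, a reading of 150 cm yields 400, and a reading of 300 cm yields the full 500. The acceleration profiles set by `normal_acc`, `middle_acc`, and `smooth_acc` in the original are left out of the sketch.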

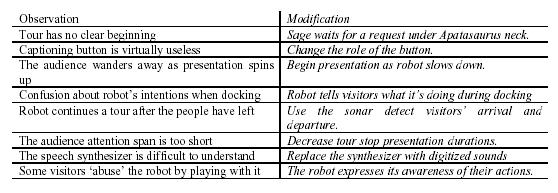

Table 1 - Ways of promoting human/robot interaction:

What must be done in the Field of Autonomous Systems?

Based on the information above, two things must be done so that the field of autonomous systems is seen as a serious discipline. After all, successful autonomous systems must be more than just toys.

First, autonomous systems must be proven to perform better than their human counterparts in order to justify their use. It is not enough to design an ‘automatic pilot’ for a car, for example, if the system cannot handle ‘stop and go’ situations, since such driving is more difficult than highway driving. The reasoning is as follows. A system that controls the car on the highway is useful, but if the purpose of the system is to prevent accidents due to intoxication, for instance, the system must do all of the driving. In addition, we cannot rely on an intoxicated passenger to give accurate directions home. For this reason, such a control system would need the assistance of an external navigational system (such as GPS) in order to get the passenger home safely.

Second, autonomous systems, when needed, must incorporate the major aspects of human behavior. One of the reasons some people dislike computers so much is that they are impersonal. Losing all of one’s work only to have the computer reply ‘stack overflow’ or ‘this program has performed an illegal operation,’ for instance, is not a good way to establish good relations between computer and user. Therefore, closer cooperation is needed among sociologists, psychologists, and system designers to ensure an acceptable (i.e. friendly) user interface. Only when these ideas are implemented can effective autonomous systems be realized.

Summary of Autonomous Vehicle Systems:

From the discussion above, I hope to convey my feeling that the field of autonomous vehicle systems shows much promise. While there is certainly still much work to be done, I would argue that autonomous systems have an advantage over other areas of AI in terms of how soon the general public can expect to see practical applications emerge. Other areas of AI research, particularly speech recognition/production, try in some way to replicate human brain activity. We need to know to some degree how the brain works in order to teach a computer to understand speech. Such an understanding is not absolutely necessary to teach a computer how to drive a car, unless of course we wish to teach it “road rage”! While designing a computer system to flawlessly drive a vehicle or perform some other task is certainly not trivial, the main constraint on autonomous systems is the current technology available. For this reason, it is hard to imagine that the near future will not see everyday implementations of autonomous systems, such as the autonomous lawnmower or the autonomous vacuum cleaner.

Works Consulted

Ackerman, Robert. “Processing technologies give robots the upper hand.” Signal. Jul. 2001: 17-20.

Adams, Jarret. “Beautiful Vision.” Red Herring. Aug. 2000.

Al-Shihabi, Talal. “Developing Intelligent Agents for Autonomous Vehicles in Real-Time Driving Simulators.” Online. Internet. 17 Oct. 2001. Available: http://www.coe.neu.edu/~mourant/velab.html

Chen, Mei, Todd Jochem, and Dean Pomerleau. “AURORA: A Vision-Based Roadway Departure Warning System.” Carnegie Mellon University. Pittsburgh, PA, 1997.

Foessel-Bunting. “Radar Sensor Model for Three-Dimensional Map Building.” Carnegie Mellon University. Pittsburgh, PA, 2000.

Nourbakhsh, Illah R. “An Affective Mobile Robot Educator with a Full-time Job.” Carnegie Mellon University. Pittsburgh, PA, 1999.

Rosenblatt, Julio K. “DAMN: A Distributed Architecture for Mobile Navigation.” Diss. Carnegie Mellon University. Pittsburgh, PA, 1997.

Siegel, Mel and Priyan Gunatilake. “Remote Inspection Technologies for Aircraft Skin Inspection.” Carnegie Mellon University. Pittsburgh, PA, 1997.

Simmons, Reid and Apfelbaum. “A Task Description Language for Robot Control.” Carnegie Mellon University. Pittsburgh, PA, 1998.

West, James. “Computer Vision.” University of Sunderland. Sunderland, UK, 1997.